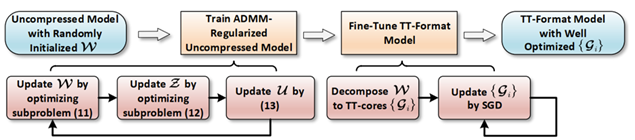

Figure: Procedure of the Compression Framework using ADMM for a TT-format DNN Model.

Invention Summary:

Deep Neural Networks (DNNs) are widely used in computer vision tasks. Large DNN models impose high storage and computational demands, and many model compression methods, such as tensor decomposition, are used to reduce these costs. However, compressing Convolutional Neural Networks (CNNs) with Tensor Train (TT) decomposition often causes a significant loss of accuracy.
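For reference, the standard TT format represents a d-way weight tensor of shape n_1 × ⋯ × n_d as a chain of small 3-way cores. The notation below (cores G_k, TT ranks r_k) is the conventional one and is not taken from this summary:

$$
\mathcal{W}(i_1, i_2, \ldots, i_d) = \mathbf{G}_1[i_1]\,\mathbf{G}_2[i_2]\cdots\mathbf{G}_d[i_d],
\qquad \mathbf{G}_k[i_k] \in \mathbb{R}^{r_{k-1}\times r_k},\quad r_0 = r_d = 1,
$$

$$
\underbrace{\prod_{k=1}^{d} n_k}_{\text{dense entries}}
\;\longrightarrow\;
\underbrace{\sum_{k=1}^{d} r_{k-1}\, n_k\, r_k}_{\text{TT-core entries}}
$$

Because the TT ranks r_k are kept small, the cores hold far fewer entries than the dense tensor.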

Researchers at Rutgers University developed a TT-based model compression method that applies the Alternating Direction Method of Multipliers (ADMM) and incurs minimal accuracy loss. The DNN model is first trained with ADMM in its original (uncompressed) structure, then decomposed into TT format, and finally fine-tuned to recover high accuracy. The framework is general: it works for both CNNs and Recurrent Neural Networks (RNNs) and can be readily adapted to other tensor decomposition approaches.
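To make the three stages concrete, here is a minimal NumPy sketch of the standard scaled-form ADMM splitting for training under a TT-format constraint. It is not the authors' implementation and rests on several assumptions: a toy least-squares loss stands in for DNN training, the W-step is solved in closed form rather than by SGD, truncated TT-SVD serves as an approximate projection onto the TT-format set, the weight matrix is simply reshaped into a 4-way tensor, and the fine-tuning stage is omitted. All function names (`tt_svd`, `tt_to_full`, `project_tt`, `admm_tt`) are hypothetical. The W-step minimizes the loss plus (ρ/2)‖W − Z + U‖², the Z-step projects W + U onto the TT set, and the dual U accumulates the remaining gap W − Z.

```python
import numpy as np


def tt_svd(tensor, ranks):
    """Truncated TT-SVD: split a d-way tensor into cores of shape (r_{k-1}, n_k, r_k).

    `ranks` holds the d-1 internal TT ranks; r_0 = r_d = 1 implicitly.
    """
    shape = tensor.shape
    cores, r_prev, mat = [], 1, tensor
    for k in range(len(shape) - 1):
        mat = mat.reshape(r_prev * shape[k], -1)
        u, s, vt = np.linalg.svd(mat, full_matrices=False)
        r = min(ranks[k], s.size)  # truncate to the target TT rank
        cores.append(u[:, :r].reshape(r_prev, shape[k], r))
        mat = s[:r, None] * vt[:r]  # carry the remainder to the next core
        r_prev = r
    cores.append(mat.reshape(r_prev, shape[-1], 1))
    return cores


def tt_to_full(cores):
    """Contract TT cores back into a dense tensor."""
    full = cores[0]
    for core in cores[1:]:
        full = np.tensordot(full, core, axes=([-1], [0]))
    return full.reshape([c.shape[1] for c in cores])


def project_tt(w, tt_shape, ranks):
    """Approximate projection of w onto TT-rank-bounded tensors (via TT-SVD)."""
    return tt_to_full(tt_svd(w.reshape(tt_shape), ranks)).reshape(w.shape)


def admm_tt(x, y, tt_shape, ranks, rho=1.0, iters=50):
    """Hypothetical sketch: scaled ADMM for min 0.5*||x @ w - y||^2 s.t. w in TT format."""
    n_in, n_out = x.shape[1], y.shape[1]
    z = np.zeros((n_in, n_out))  # TT-constrained copy of the weights
    u = np.zeros_like(z)         # scaled dual variable
    lhs = x.T @ x + rho * np.eye(n_in)
    xty = x.T @ y
    for _ in range(iters):
        # W-step: "train" the uncompressed weights (closed form for this toy loss).
        w = np.linalg.solve(lhs, xty + rho * (z - u))
        # Z-step: pull the weights toward the TT-format set.
        z = project_tt(w + u, tt_shape, ranks)
        # Dual step: accumulate the gap between w and its TT copy.
        u = u + w - z
    # Decompose the trained weights into TT cores (fine-tuning omitted here).
    return w, tt_svd(w.reshape(tt_shape), ranks)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    tt_shape, ranks = (4, 4, 4, 4), (2, 2, 2)
    # Synthetic target weights that are exactly TT-rank (2, 2, 2).
    true_cores = [rng.standard_normal(s) for s in [(1, 4, 2), (2, 4, 2), (2, 4, 2), (2, 4, 1)]]
    w_true = tt_to_full(true_cores).reshape(16, 16)
    x = rng.standard_normal((200, 16))
    w, cores = admm_tt(x, x @ w_true, tt_shape, ranks)
    w_tt = tt_to_full(cores).reshape(16, 16)
    print("relative error:", np.linalg.norm(w_tt - w_true) / np.linalg.norm(w_true))
```

In a real network the W-step would presumably be several epochs of SGD on the task loss plus the ρ-weighted proximal term, and the resulting TT cores would then be fine-tuned directly.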

The framework was evaluated on several DNN models for image classification and video recognition tasks. Experimental results show that the ADMM-based TT-format models achieve high compression ratios while maintaining high accuracy. Notably, on CIFAR-100, with 2.3× and 2.4× compression ratios, the compressed models achieve 1.96% and 2.21% higher top-1 accuracy than the original ResNet-20 and ResNet-32, respectively. When compressing ResNet-18 on ImageNet, the model achieves a 2.47× FLOPs reduction without accuracy loss.
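The compression ratios quoted above compare parameter counts before and after compression. The helper below is a sketch, assuming the ratio for a single weight tensor is its dense entry count divided by the total entries of its TT cores. The function names and the example layer shape and ranks are hypothetical, and the helper does not reproduce the model-level figures reported for ResNet-20, ResNet-32, or ResNet-18, which depend on layer reshaping and rank choices not given here.

```python
from math import prod


def tt_param_count(shape, ranks):
    """Hypothetical helper: entries in the TT cores of a tensor with internal TT `ranks`."""
    full_ranks = [1, *ranks, 1]  # boundary ranks r_0 = r_d = 1
    return sum(full_ranks[k] * n * full_ranks[k + 1] for k, n in enumerate(shape))


def tt_compression_ratio(shape, ranks):
    """Dense entry count divided by TT-core entry count."""
    return prod(shape) / tt_param_count(shape, ranks)


if __name__ == "__main__":
    # Hypothetical layer: 256 weights reshaped to (4, 4, 4, 4) with TT ranks (2, 2, 2).
    print(tt_param_count((4, 4, 4, 4), (2, 2, 2)))        # 48
    print(tt_compression_ratio((4, 4, 4, 4), (2, 2, 2)))  # 5.333333333333333
```

Raising the TT ranks improves how closely the cores can approximate the trained weights but lowers the compression ratio, which is the trade-off the reported results navigate.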

Market Applications: Neural-network-based chipsets can be used in embedded and Internet-of-Things (IoT) systems, such as:

- Smart phones

- Smart home security systems

- Wearable health monitors

- Ultra-high-speed wireless internet, etc.

Advantages:

- High Area and Energy Efficiency

- High Compression Ratio

- High Accuracy

- User-friendly

- Applicable to other tensor decomposition approaches, such as Tensor Ring (TR), Block-Term (BT), and Tucker decomposition

Intellectual Property & Development Status: Patent pending. Available for licensing and/or collaboration.

Publication: